This landed in my inbox this morning and I had such fun reading it (I’d seen the project and the cease and desist letter at Transmediale on Sunday) that I thought I’d post it here in case it introduces other people to this beautifully ironic homage to Facebook (about which you probably know my feelings).

Press Release, February 10th, 2011. Somewhere in Europe.

* Face to Facebook.

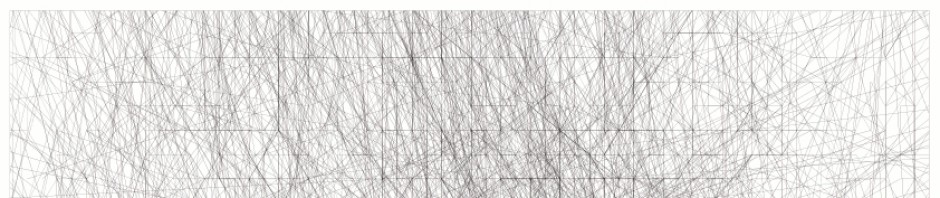

https://face-to-facebook.net

Face to Facebook is a project by Paolo Cirio and Alessandro Ludovico, who wrote special software to steal 1 million public profiles from Facebook, filtering them through face-recognition software and posting the resulting 250,000 profiles (categorized by facial expression) on a dating website called Lovely-Faces.com.

The project was launched at Transmediale, the annual festival for art and digital culture in Berlin, on February 2nd, in the form of an installation displaying a selection of 1,716 pictures of unaware Facebook users, an explanatory video and a diagram detailing the whole process. The Lovely-Faces.com website went online on the same day.

* The Global Mass Media Hack Performance.

On February 3rd a global media performance started with a few epicenters that after a few days had involved Wired, Fox News, CNN, MSNBC, Time, MSN, Gizmodo, Ars Technica, Yahoo News, WSB Atlanta TV, San Francisco Chronicle, The Globe and Mail, La Prensa, AFP, The Sun, The Daily Mail, The Independent, Spiegel Online, Tagesschau TV News, Sueddeutsche, Der Standard, Liberation, Le Soir, One India News, Bangkok Post, Taipei Times, News24, The Age, Brisbane Times and dozens of others. It was a “perfect news” story for the hectic online world: it was about a service used by 500,000,000 users and it potentially affected all of them. Even more importantly, it boosted our inherent fear of not being able to control what we do through our connected screens. Exquisitely put by Time: “you might be signed up for Lovely-Faces.com’s dating services and not even know it.” At the end of the day Cirio’s and Ludovico’s Facebook accounts were disabled and a “cease and desist” letter from Perkins Coie LLP (Facebook lawyers) landed in their inboxes, including a request to give back to Facebook “their data”. We can properly define it as a performance since it happened in a short time span, involved the audience in a transformation, and evolved into a thrilling story. The frenzied pace of these digital events was almost unbearable.

* The Social Experiment.

In the subsequent days the media performance continued at a very fast pace and what we still define as a “social experiment” was actually quite successful. Starting on February 4th the news went spontaneously viral: thousands of tweets and retweets pointed to the Lovely-Faces.com website or to articles and blog posts, often urging people to check if they (and their loved ones) were on the website or not. In a few days Lovely-Faces.com received 964,477 page views from 195 different countries. Reactions varied from asking to be removed (which we diligently did) to asking to be included, from anonymous death threats to proposals of commercial partnerships.

* Back to Facebook.

We approached the Electronic Frontier Foundation about legal counsel, but after a second warning by Perkins Coie, we temporarily put up a notice that Lovely-Faces.com is under maintenance. But they are not OK with that. They want Lovely-Faces.com not to be reachable. And they even want the same for Face-to-Facebook.net, the website where we explain the project. So basically their current aim is to completely remove the web presence of this artistic project and social experiment. They missed that Face to Facebook was also meant as a homage to FaceMash, the system Mark Zuckerberg established by scraping the names and photos of fellow classmates off school servers, which was the very first Facebook. Furthermore, it’s a bit funny hearing Facebook complain about the scraping of personal data that are quasi-public and whose exclusive ownership by Facebook is doubtful (as a Stanford Law School scholar wondered when analyzing Lovely-Faces.com). We obtained them through a script that never even logged in to their servers, but only very rapidly “viewed” (and recorded) the profiles. Finally, and paradoxically enough, Facebook has blocked us from accessing our Facebook profiles, but all the data we posted in the last years is still there. This proves once more that they care much more about the data you post than your online identity.

We’re going to reclaim access to our Facebook accounts, and the right to express and document our work on our own websites. And even if we are forced to go offline, Lovely-Faces.com will never go offline in the minds of the people involved.

Face to Facebook data:

People who asked to be removed from the database: 56

People who asked to be included in the database: 14

Commercial dating website partnership proposals: 4

Other partnership proposals: 9

Cease and desist letters by Perkins Coie LLP (Facebook lawyers): 1

Other threatened lawsuits or class actions: 11

Anonymous email death threats: 5

TV reports: 3

Online news about Lovely-Faces.com (source: Google News): 427

Number of times the “Lovely Faces” introductory video has been viewed on YouTube: 31,089

Unique users on Lovely-Faces.com: 211,714

Face to Facebook links (a few):

Fox News LA (video)

https://www.myfoxla.com/dpp/lifestyle/facebook-profiles-scraped-for-fake-dating-site-20110207

WSBTV 2 (video)

https://www.wsbtv.com/news/26781527/detail.html

Tagesschau (video, in German)

Wired.com

https://www.wired.com/epicenter/2011/02/facebook-dating/

Stanford Law School / The Center for Internet and Society

https://cyberlaw.stanford.edu/node/6613

Face to Facebook

https://www.face-to-facebook.net/contact.php

— Alessandro Ludovico – Neural – (https://neural.it/)